XR architecture

Unity supports XR development through its plug-in framework and a set of feature and tool packages. Go to the XR Plug-in Management category in Project Settings to enable XR support in a Unity project and to choose the plug-ins for the XR platforms that your project supports. Use the Unity Package Manager to install the additional feature packages.

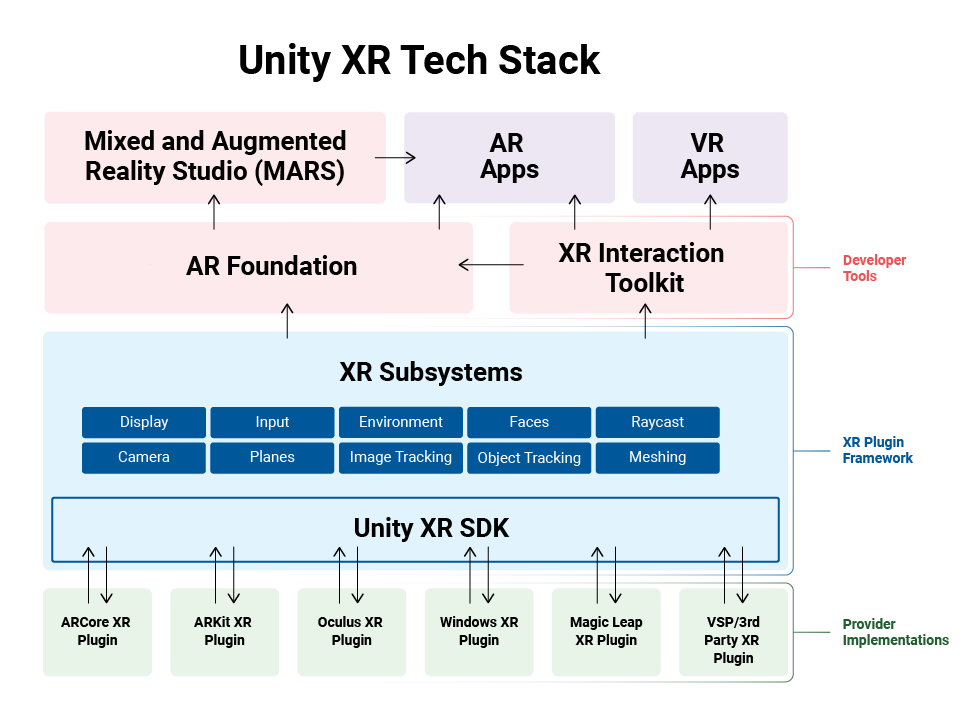

The following diagram illustrates the current Unity XR plug-in framework structure and how it works with platform provider implementations:

XR subsystems define a common interface for XR features. XR plug-ins implement these subsystem interfaces to provide data to the subsystem at runtime. Your XR application can access data for an XR feature through Unity Engine and package APIs.
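The following is a conceptual sketch of that division of responsibilities. It is written in Python rather than Unity's C# API so that it runs anywhere. It models one subsystem as an abstract interface, a provider plug-in as a class that implements it, and application code that reads feature data only through the interface. `TrackingSubsystem`, `FakeDeviceProvider`, `Pose`, and `camera_height` are hypothetical names chosen for illustration. They are not Unity types.

```python
from abc import ABC, abstractmethod
from dataclasses import dataclass


@dataclass
class Pose:
    """Hypothetical pose data (position only, for brevity)."""
    x: float
    y: float
    z: float


class TrackingSubsystem(ABC):
    """Hypothetical common interface for one XR feature: head tracking."""

    @abstractmethod
    def head_pose(self) -> Pose:
        """Return the latest head pose supplied by the provider."""


class FakeDeviceProvider(TrackingSubsystem):
    """Hypothetical provider plug-in.

    A real provider would query the device platform here. This one
    returns a fixed pose so the sketch can run without hardware.
    """

    def head_pose(self) -> Pose:
        return Pose(0.0, 1.6, 0.0)


def camera_height(tracking: TrackingSubsystem) -> float:
    """Application code: depends only on the interface, not on a provider."""
    return tracking.head_pose().y


print(camera_height(FakeDeviceProvider()))  # 1.6
```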

XR provider plug-in framework

An XR provider plug-in is a Unity plug-in that supports one or more XR device platforms. For example, the ARCore plug-in supports the Android AR platform on hand-held Android devices, while the OpenXR plug-in supports several XR devices on multiple operating systems.

An XR provider plug-in implements interfaces defined by the Unity XR SDK. These interfaces are called subsystems, and a plug-in that implements one or more of them is called a provider plug-in. Typically, a provider plug-in uses the device platform's native libraries to implement the Unity interfaces for that platform's devices.

Unity uses subsystem interfaces to communicate with providers for various platforms, powering the XR features of your application. Because of these interfaces, you can reuse the same feature code in your application across all XR devices that have a provider for that feature.
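As a conceptual illustration (Python, not Unity's C# API), the sketch below shows two providers that each wrap a differently shaped "native" call behind the same subsystem interface, and one feature function that works unchanged with either. The plane-detection interface, both vendor functions, and all class names are hypothetical stand-ins for real platform libraries.

```python
from abc import ABC, abstractmethod


class PlaneDetectionSubsystem(ABC):
    """Hypothetical subsystem interface: report detected planes."""

    @abstractmethod
    def plane_count(self) -> int: ...


# Hypothetical stand-ins for two device platforms' native libraries.
def vendor_a_native_planes() -> list[str]:
    return ["floor", "table"]


def vendor_b_native_surface_total() -> int:
    return 3


class VendorAProvider(PlaneDetectionSubsystem):
    """Adapts vendor A's native call to the common interface."""

    def plane_count(self) -> int:
        return len(vendor_a_native_planes())


class VendorBProvider(PlaneDetectionSubsystem):
    """Adapts vendor B's differently shaped native call."""

    def plane_count(self) -> int:
        return vendor_b_native_surface_total()


def has_enough_surfaces(planes: PlaneDetectionSubsystem, needed: int) -> bool:
    """Feature code written once and reused with any provider."""
    return planes.plane_count() >= needed


for provider in (VendorAProvider(), VendorBProvider()):
    print(type(provider).__name__, has_enough_surfaces(provider, 3))
# VendorAProvider False
# VendorBProvider True
```

The providers carry all platform-specific code. `has_enough_surfaces` only ever sees the interface, which is what lets the same feature code run on every device that has a provider.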

Subsystems

XR subsystems give you access to XR features in your Unity app. The Unity XR SDK defines a common interface for subsystems so that all provider plug-ins implementing a feature generally work the same way in your app. Often you can change the active provider and rebuild your app to run on a different XR platform, as long as the platforms are largely similar.
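One way to picture the caveat about similar platforms is the following conceptual Python sketch (not a Unity API). Switching to another provider and rebuilding succeeds only when the new provider implements every subsystem the app relies on. `ProviderPlugin`, `build`, and both example plug-ins are hypothetical, and their subsystem lists are invented for illustration.

```python
from dataclasses import dataclass, field


@dataclass
class ProviderPlugin:
    """Hypothetical provider plug-in: a name plus the subsystems it implements."""
    name: str
    subsystems: set[str] = field(default_factory=set)


# Hypothetical plug-ins for two platforms.
HEADSET = ProviderPlugin("headset", {"Display", "Input", "Meshing"})
PHONE_AR = ProviderPlugin("phone-ar", {"Input", "Meshing"})

# The features the app's code relies on. This set does not change
# when the active provider changes.
APP_FEATURES = {"Input", "Meshing"}


def build(active: ProviderPlugin, needed: set[str]) -> str:
    """'Rebuild' against the active provider, checking feature coverage."""
    missing = needed - active.subsystems
    if missing:
        raise RuntimeError(f"{active.name} lacks: {sorted(missing)}")
    return f"built for {active.name}"


print(build(HEADSET, APP_FEATURES))   # built for headset
print(build(PHONE_AR, APP_FEATURES))  # built for phone-ar
```

Calling `build(PHONE_AR, {"Display"})` raises `RuntimeError`, because that hypothetical provider does not implement the Display subsystem.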

The Unity Engine defines a set of fundamental XR subsystems. Unity packages can provide additional subsystems. For example, the AR Subsystems package contains many of the AR-specific subsystem interfaces.
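The relationship between engine-defined and package-defined subsystems can be modeled as a registry that starts with a fixed core set and lets packages add new interface names. This is a conceptual Python sketch. `SubsystemRegistry`, `register`, and the `PlaneDetection` and `Raycast` names are hypothetical and do not reflect how Unity stores subsystem definitions internally.

```python
# Core subsystems defined by the engine (names taken from the table in this page).
ENGINE_SUBSYSTEMS = ("Display", "Input", "Meshing")


class SubsystemRegistry:
    """Hypothetical registry mapping each subsystem name to its source."""

    def __init__(self) -> None:
        self._names: dict[str, str] = {name: "engine" for name in ENGINE_SUBSYSTEMS}

    def register(self, name: str, source: str) -> None:
        """Let a package add a subsystem interface the engine lacks."""
        if name in self._names:
            raise ValueError(f"{name} already defined by {self._names[name]}")
        self._names[name] = source

    def defined_by(self, source: str) -> list[str]:
        return sorted(n for n, s in self._names.items() if s == source)


registry = SubsystemRegistry()
# A hypothetical AR package adds AR-specific interfaces.
registry.register("PlaneDetection", "AR package")
registry.register("Raycast", "AR package")
print(registry.defined_by("engine"))      # ['Display', 'Input', 'Meshing']
print(registry.defined_by("AR package"))  # ['PlaneDetection', 'Raycast']
```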

The subsystems defined in the Unity Engine include:

| Subsystem | Description |
|---|---|
| Display | Stereo XR display. |
| Input | Spatial tracking and controller input. |
| Meshing | Generate 3D meshes from environment scans. |

Note: Unity applications typically do not interact with subsystems directly. Instead, the features provided by a subsystem are exposed to the application through an XR plug-in or package. For example, the ARMeshManager component in the AR Foundation package lets you add the meshes created by the Meshing subsystem to a scene.
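The sketch below shows the "manager in front of a subsystem" pattern conceptually, in Python rather than Unity's C# API. `MeshManager` plays a role loosely modeled on ARMeshManager: it polls a meshing subsystem and turns the raw data into scene objects, so application code never calls the subsystem itself. `MeshingSubsystem`, `ScanProvider`, `Scene`, and `MeshManager` are hypothetical names, and the real component's behavior is more involved.

```python
from abc import ABC, abstractmethod


class MeshingSubsystem(ABC):
    """Hypothetical low-level interface: raw mesh data from environment scans."""

    @abstractmethod
    def new_meshes(self) -> list[list[tuple[float, float, float]]]: ...


class ScanProvider(MeshingSubsystem):
    """Hypothetical provider that reports one triangle per update."""

    def new_meshes(self) -> list[list[tuple[float, float, float]]]:
        return [[(0.0, 0.0, 0.0), (1.0, 0.0, 0.0), (0.0, 0.0, 1.0)]]


class Scene:
    """Minimal stand-in for a scene: a list of object names."""

    def __init__(self) -> None:
        self.objects: list[str] = []


class MeshManager:
    """Hypothetical manager: the only code that talks to the subsystem."""

    def __init__(self, subsystem: MeshingSubsystem, scene: Scene) -> None:
        self._subsystem = subsystem
        self._scene = scene

    def update(self) -> None:
        # Convert each new raw mesh into a named scene object.
        for vertices in self._subsystem.new_meshes():
            index = len(self._scene.objects)
            self._scene.objects.append(f"Mesh {index} ({len(vertices)} vertices)")


scene = Scene()
manager = MeshManager(ScanProvider(), scene)
manager.update()
manager.update()
print(scene.objects)  # ['Mesh 0 (3 vertices)', 'Mesh 1 (3 vertices)']
```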