Frame Debugger

The Frame Debugger lets you freeze playback for a running game on a particular frame and view the individual draw calls that are used to render that frame. As well as listing the draw calls, the debugger lets you step through them one by one, so you can see in great detail how the Scene is constructed from its graphical elements.

Using the Frame Debugger

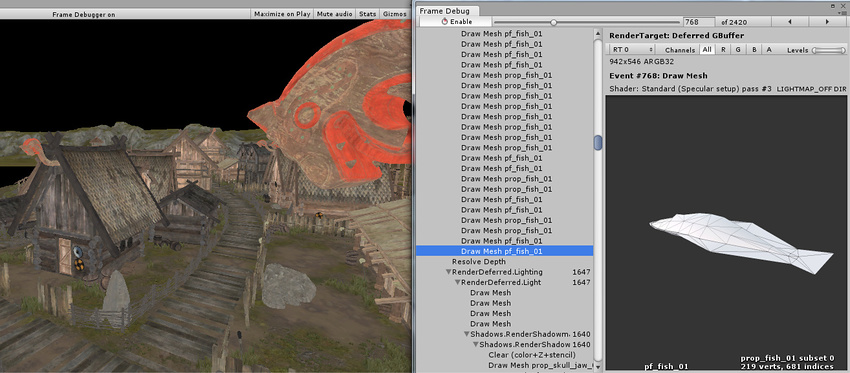

The Frame Debugger window (menu: Window > Analysis > Frame Debugger) shows the draw call information and lets you control the “playback” of the frame under construction.

The main list shows the sequence of draw calls (and other events, such as framebuffer clears) as a hierarchy that identifies where they originated. The panel to the right of the list gives further information about the selected draw call, such as the geometry details and the shader used for rendering.

Clicking an item in the list shows the Scene (in the Game view) as it appears up to and including that draw call. The left and right arrow buttons in the toolbar move forward and backward through the list by a single step, and the arrow keys do the same. Additionally, the slider at the top of the window lets you “scrub” rapidly through the draw calls to locate an item of interest quickly. Where a draw call corresponds to the geometry of a GameObject, that object is highlighted in the main Hierarchy panel to assist identification.
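The “up to and including” behavior can be pictured as replaying a prefix of the event list into an empty framebuffer. The sketch below is a hypothetical conceptual model only, not Unity's implementation: the `Event` class, the `replay` function and the tiny two-pixel framebuffer are all invented for illustration. Clears fill every pixel, and each later draw overwrites the pixels it touches.

```python
from dataclasses import dataclass, field


# Hypothetical model of a Frame Debugger event; not Unity's internal representation.
@dataclass
class Event:
    name: str
    kind: str                                   # "clear" or "draw"
    color: tuple = (0, 0, 0)                    # fill color used by a clear
    pixels: dict = field(default_factory=dict)  # (x, y) -> color written by a draw


def replay(events, selected, width=2, height=1):
    """Return the framebuffer after events[0] through events[selected] have run."""
    framebuffer = {(x, y): None for x in range(width) for y in range(height)}
    for event in events[: selected + 1]:        # "up to and including" the selection
        if event.kind == "clear":
            for key in framebuffer:
                framebuffer[key] = event.color
        else:
            framebuffer.update(event.pixels)    # later draws overwrite earlier ones
    return framebuffer


events = [
    Event("Clear", "clear", color=(0, 0, 0)),
    Event("Draw Floor", "draw", pixels={(0, 0): (128, 128, 128), (1, 0): (128, 128, 128)}),
    Event("Draw Cube", "draw", pixels={(1, 0): (255, 0, 0)}),
]

# Stepping with the arrow keys corresponds to changing `selected` by one.
for index, event in enumerate(events):
    print(event.name, replay(events, index))
```

Selecting “Draw Floor” shows the floor but not the cube, because the cube's draw call comes later in the list.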

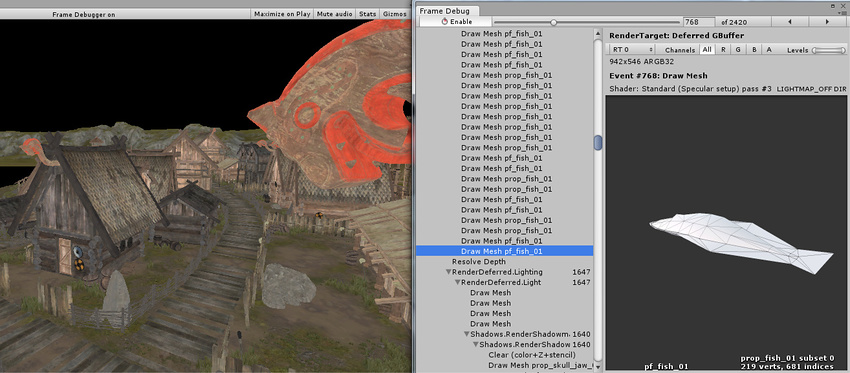

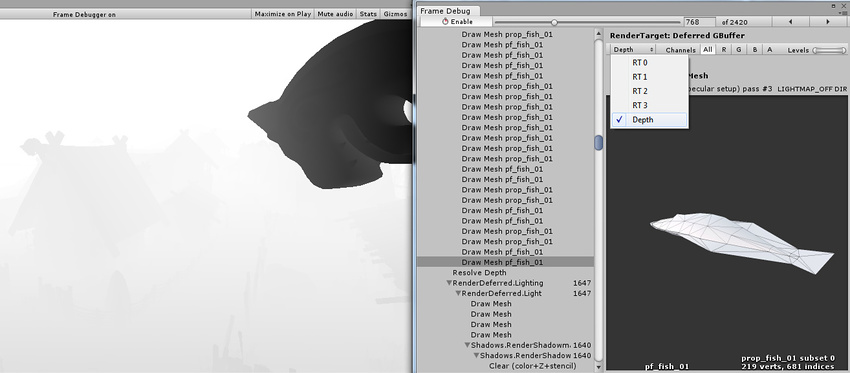

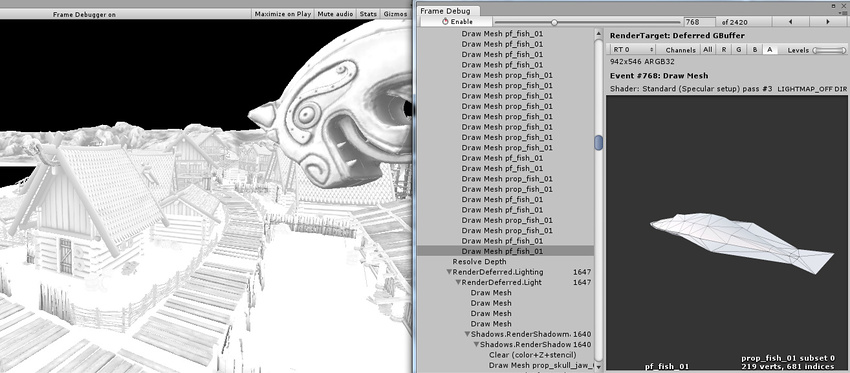

If rendering happens into a RenderTexture at the selected draw call, the contents of that RenderTexture are displayed in the Game view. This is useful for inspecting how various off-screen render targets are built up, for example the diffuse g-buffer in deferred shading:

Or you can look at how the shadow maps are rendered:

Remote Frame Debugger

To use the Frame Debugger remotely, the player must support multithreaded rendering. Most Unity platforms support it, but some do not: WebGL, for example, doesn't, so the Frame Debugger cannot be used with WebGL players. You must also enable ‘Development Build’ when building the player.

Note for desktop platforms: be sure to enable the ‘Run In Background’ option before building. If you run the Editor and the player on the same machine, controlling the Frame Debugger in the Editor takes focus away from the player, and without ‘Run In Background’ the player won't reflect any rendering changes until it regains focus.

Quick Start:

- From the Editor, build the project for the target platform (with Development Build enabled)

- Run the player

- Go back to the Editor

- Open the Frame Debugger window

- Click Active Profiler and select the player

- Click Enable; the Frame Debugger should then enable on the player

Render target display options

At the top of the information panel is a toolbar which lets you isolate the red, green, blue and alpha channels for the current state of the Game view. Similarly, you can isolate areas of the view according to brightness levels using the Levels slider to the right of these channel buttons. These are only enabled when rendering into a RenderTexture.
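As a rough illustration of what isolating a single channel means, the sketch below shows one stored channel of an RGBA texel as an opaque gray value, which is a common way for texture viewers to present a lone channel. This is an assumption for illustration, not a description of how Unity's toolbar is implemented; the function name `isolate_channel` and the "R"/"G"/"B"/"A" labels are hypothetical.

```python
def isolate_channel(pixel, channel):
    """Show one channel of an RGBA pixel as an opaque gray value.

    pixel   -- (r, g, b, a) tuple of floats in [0, 1]
    channel -- "R", "G", "B" or "A" (hypothetical labels for the toolbar toggles)
    """
    if channel not in ("R", "G", "B", "A"):
        raise ValueError(f"unknown channel: {channel!r}")
    value = pixel["RGBA".index(channel)]
    # Copy the chosen channel into R, G and B so it is visible; force alpha to 1.
    return (value, value, value, 1.0)


# A made-up texel; isolating alpha reveals data that is invisible in normal display.
texel = (0.6, 0.4, 0.3, 0.8)
print(isolate_channel(texel, "A"))
print(isolate_channel(texel, "G"))
```

Showing a channel as gray, rather than tinted, makes channels that hold non-color data (such as an alpha channel used for occlusion) readable on screen.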

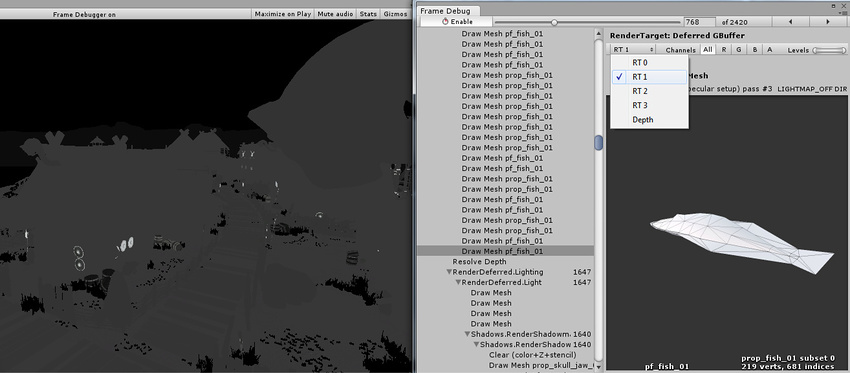

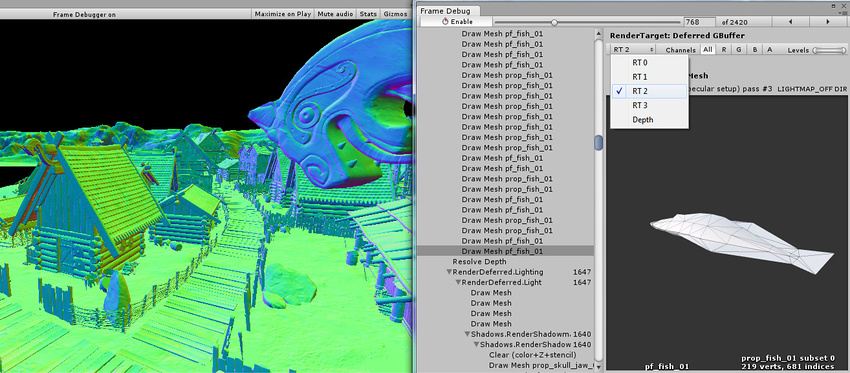

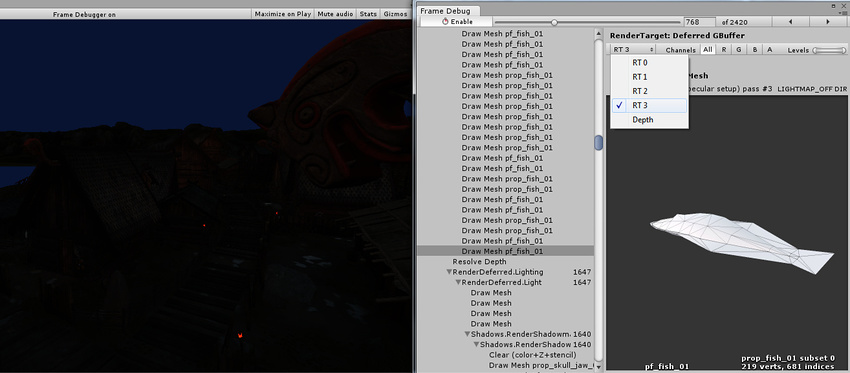

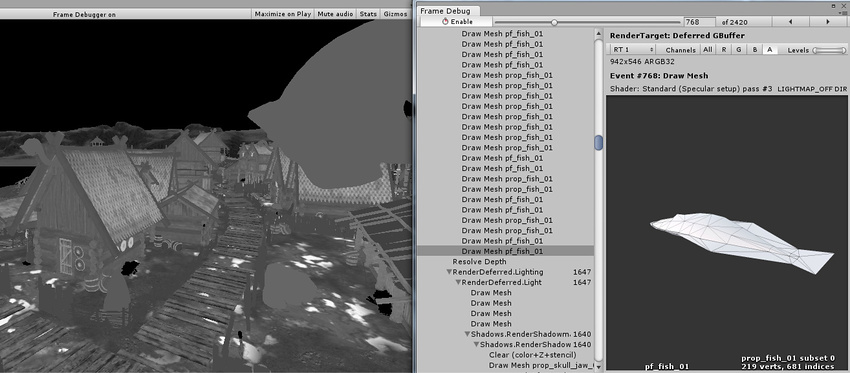

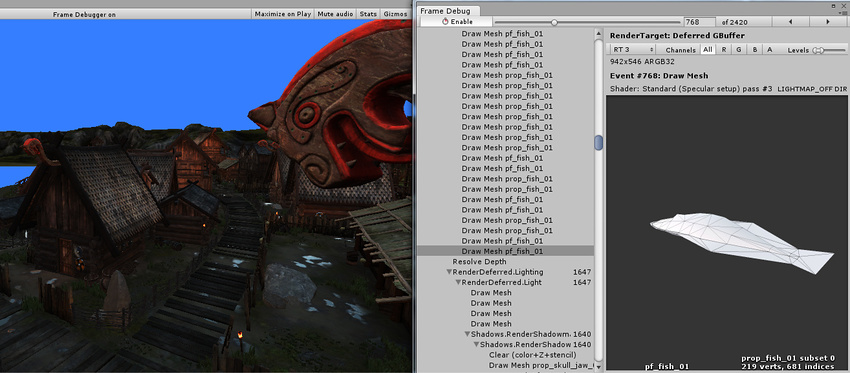

When rendering into multiple render targets at once, you can select which one to display in the Game view. Shown here are the diffuse, specular, normals and emission/indirect lighting buffers in Unity 5.0's deferred shading mode, respectively:

Additionally, you can see the depth buffer contents by picking “Depth” from the dropdown:

By isolating the alpha channel of the render texture, you can see the occlusion (stored in RT0 alpha) and smoothness (stored in RT1 alpha) of the deferred g-buffer:

The emission and ambient/indirect lighting in this Scene is very dark; we can make it more visible by changing the Levels slider:

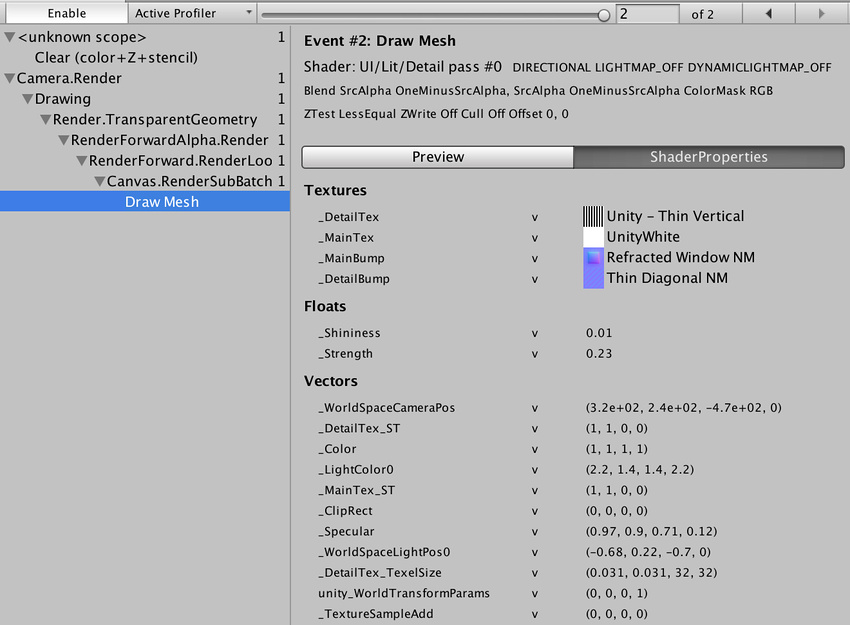
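One way to think about the Levels slider is as a linear remap: values between a chosen low and high bound are stretched to fill the full 0 to 1 display range, and values outside are clamped. The sketch below assumes exactly that linear mapping; the manual does not state the formula Unity uses, and `apply_levels` is a hypothetical name.

```python
def apply_levels(value, low, high):
    """Linearly remap value from [low, high] to [0, 1], clamping outside the range."""
    if high <= low:
        raise ValueError("high must be greater than low")
    t = (value - low) / (high - low)
    return min(max(t, 0.0), 1.0)


# Made-up dark emission values, similar to the nearly black buffer described above.
dark_pixels = [0.01, 0.03, 0.05, 0.08]

# The full range [0, 1] leaves the values unchanged, so they still look almost black.
print([apply_levels(v, 0.0, 1.0) for v in dark_pixels])

# Narrowing the range to [0, 0.1] stretches them across most of the visible range.
print([round(apply_levels(v, 0.0, 0.1), 2) for v in dark_pixels])
```

Narrowing the range only changes how the buffer is displayed; the values stored in the render target stay the same.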

Viewing shader property values

For draw calls, the Frame Debugger can also show the shader property values that are used. Click the “Shader Properties” tab to show them:

For each property, the value is shown, as well as which shader stages it was used in (vertex, fragment, geometry, hull, domain). Note that when using OpenGL (e.g. on a Mac), all shader properties are considered to be part of the vertex shader stage, due to how GLSL shaders work.

In the Editor, thumbnails for textures are displayed too, and clicking on them highlights the textures in the Project window.

Alternative frame debugging techniques

You could also use external tools to debug rendering. Editor integration exists for easily launching RenderDoc to inspect the Scene or Game view in the Editor.

You can also build a standalone player and run it through any of the following:

- Visual Studio graphics debugger

- Intel GPA

- RenderDoc

- NVIDIA NSight

- AMD GPU PerfStudio

- Xcode GPU Frame Capture

- GPU Driver Instruments

When you’ve done this, capture a frame of rendering, then step through the draw calls and other rendering events to see what’s going on. This is a very powerful approach, because these tools provide a large amount of detailed information for drilling down into a rendering problem.