Warning

Warning: Unity Simulation is deprecated as of December 2023, and is no longer available.

Background

CPU and GPU Scheduling

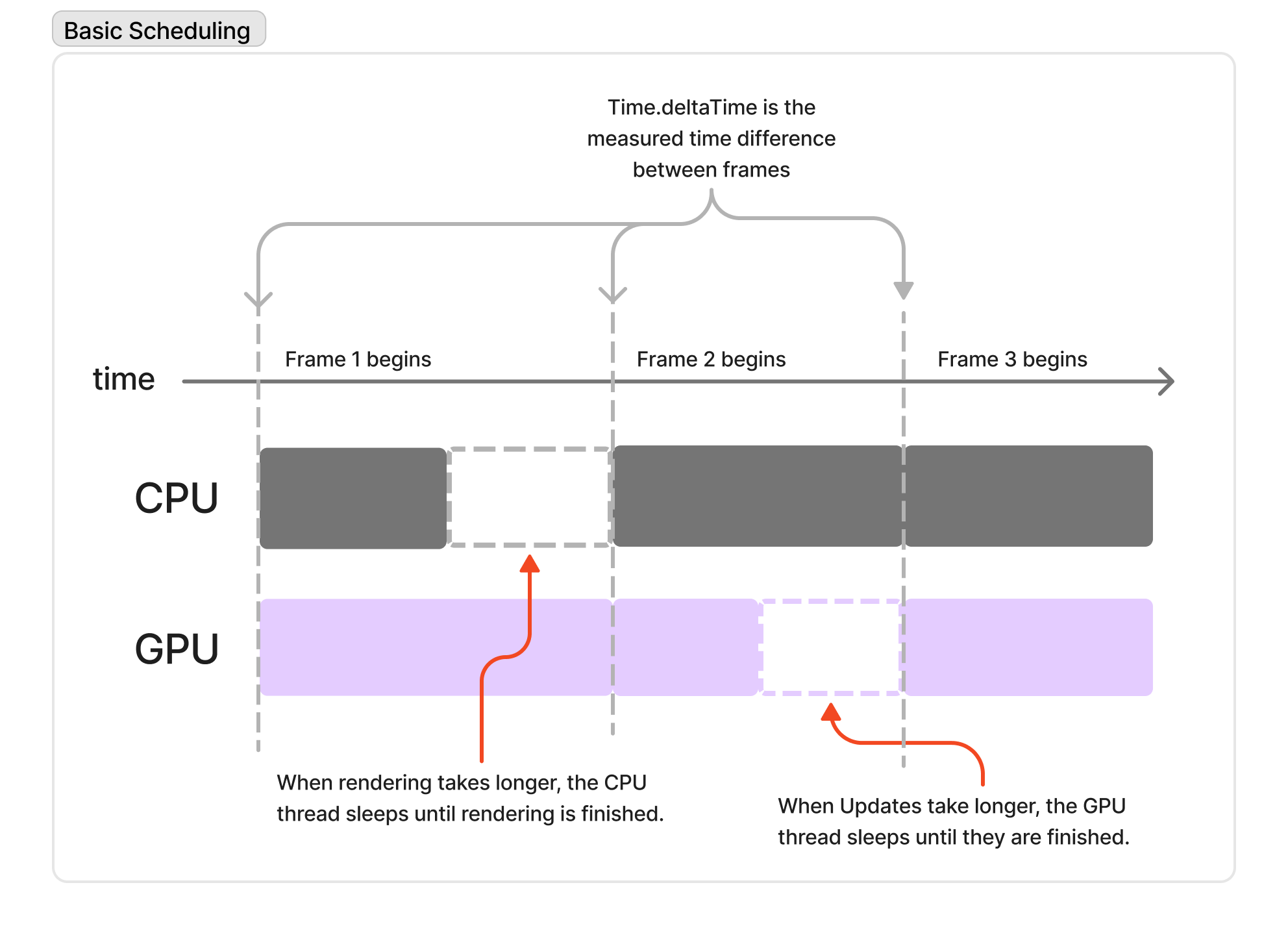

Unity, like most game engines, was originally built to let game developers, indie and AAA alike, build games of widely varied scope that run on widely varied hardware. To keep framerates stable across such a broad possibility space, in both program complexity and hardware specifications, the engine must schedule the work meant to run on the CPU and GPU in a highly flexible manner. At a high level, work can be separated into two major categories: CPU work, which runs on the main thread, and GPU work, which is dispatched to an internally managed rendering thread via engine APIs. Work cycles are divided into Frames, where a Frame is a single image rendered to the main viewport. When CPU work takes longer than GPU work (perhaps a game has many agents in the Scene, each requiring complex logic every Frame), the rendering thread sleeps to allow the main thread to catch up. In the opposite case, where GPU work takes longer (often the case on hardware with older graphics cards or with too many fancy rendering features enabled), the main thread sleeps to allow the renderer to finish.

A very coarse view of how work is scheduled in Unity's game loop.

The flexibility of this reactive, real-time scheduling model allows game developers to adjust the rendering fidelity of their games on a per-instance basis, getting the best balance of framerate and graphical fidelity across many different platforms.

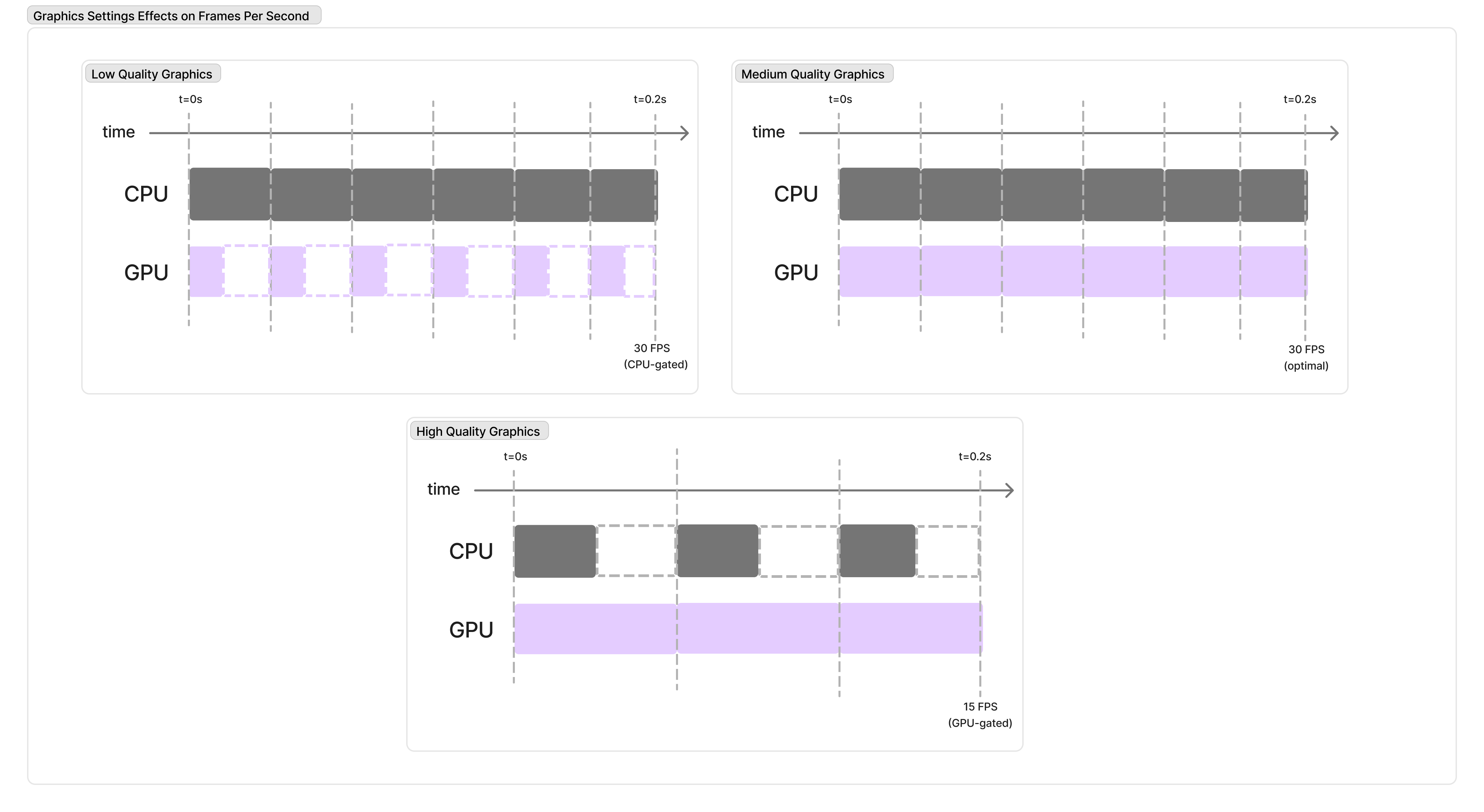

While the CPU work cycle stays roughly the same, adjusting graphical fidelity lets a game make the best use of its available resources. By keeping the two work times roughly equal, much less time and compute is wasted with one thread waiting for the other to catch up.
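The scheduling described above can be approximated with a deliberately simplified model in which each frame lasts as long as the slower of the two threads, and the faster thread sleeps for the difference. This is a sketch, not Unity's actual scheduler: it ignores the overlap (pipelining) between the main and render threads across consecutive frames, and the `FrameCost` type, helper names, and millisecond figures are hypothetical.

```python
from dataclasses import dataclass


@dataclass
class FrameCost:
    cpu_ms: float  # main-thread (game logic) work for one frame
    gpu_ms: float  # render-thread work for one frame


def frame_time_ms(cost: FrameCost) -> float:
    """Simplified model: a frame lasts as long as the slower thread."""
    return max(cost.cpu_ms, cost.gpu_ms)


def idle_ms(cost: FrameCost) -> float:
    """Time the faster thread spends sleeping while the slower one finishes."""
    return abs(cost.cpu_ms - cost.gpu_ms)


# Hypothetical numbers: identical CPU work at two rendering-quality settings.
settings = {
    "high quality": FrameCost(cpu_ms=10.0, gpu_ms=25.0),
    "low quality": FrameCost(cpu_ms=10.0, gpu_ms=11.0),
}

for name, cost in settings.items():
    print(f"{name}: frame {frame_time_ms(cost)} ms, idle {idle_ms(cost)} ms")
```

In this toy model, lowering rendering quality shrinks the frame from 25 ms to 11 ms and the idle time from 15 ms to 1 ms, which is the balancing effect the illustration describes.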

While great for keeping a game running smoothly, this sort of scheduling is not ideal for simulation, as becomes apparent once we examine how physics fits into this loop.

FixedUpdate (Physics) Scheduling

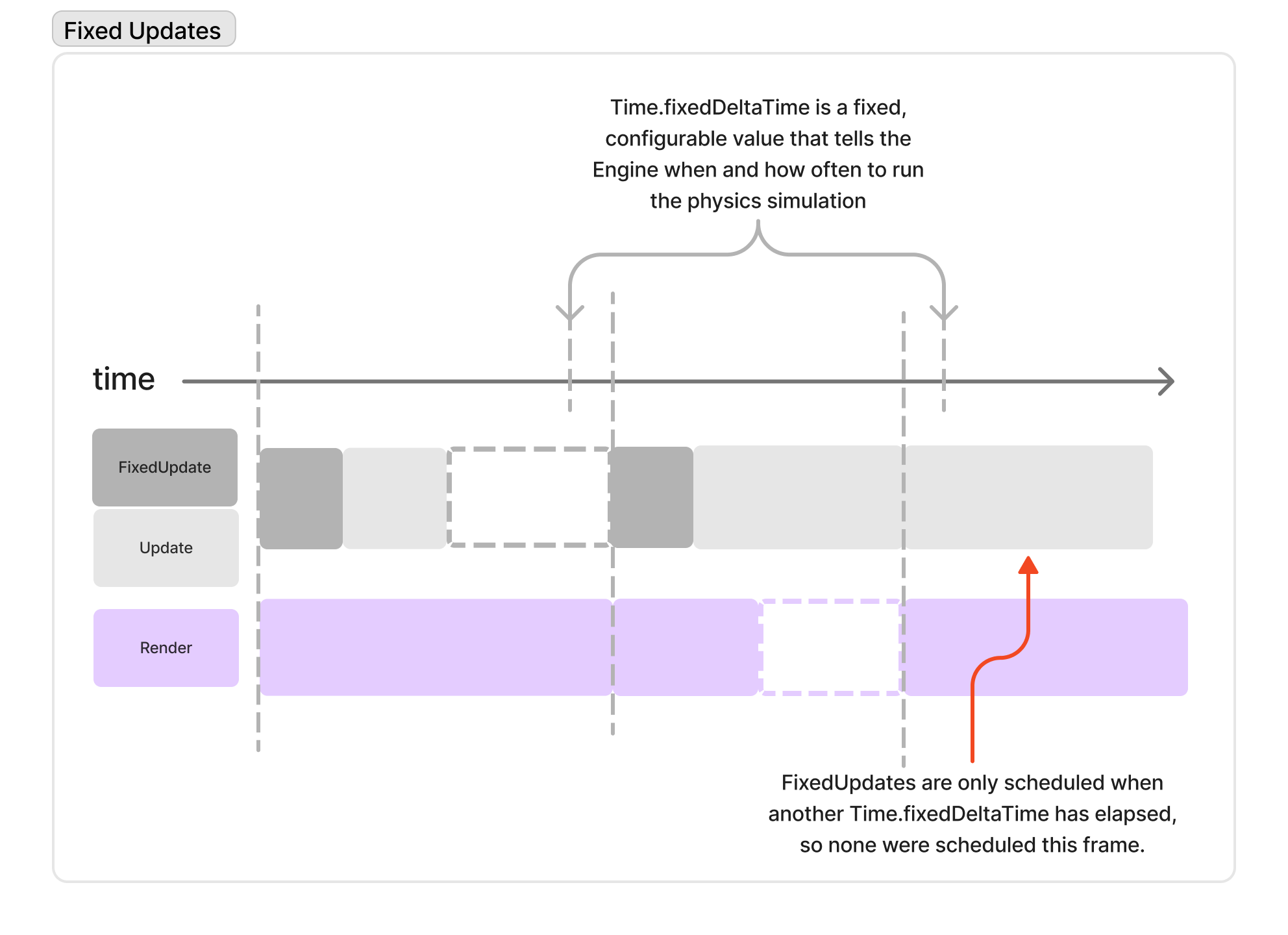

If we examine the work being done on the CPU thread a little more closely, we find that we can again separate it into two major categories: game and physics. Any code executed inside the FixedUpdate function is de facto physics logic. Code not executed inside FixedUpdate is therefore game logic, or "non-physics."

The engine attempts to schedule FixedUpdate invocations at a fixed frequency, independent of an application's framerate. Every time the fixed period elapses, a FixedUpdate is scheduled at the beginning of the next frame.
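The scheduling rule above behaves like a fixed-timestep accumulator: real time elapsed during a frame is added to a running total, and each whole fixed period in that total becomes one FixedUpdate at the start of the next frame. The sketch below models that assumption in integer milliseconds to avoid floating-point drift; it ignores details such as Unity's `Time.maximumDeltaTime` cap, and the function name and frame durations are hypothetical.

```python
def fixed_updates_after_each_frame(frame_durations_ms, fixed_dt_ms):
    """For each frame, return how many FixedUpdates get scheduled to run at
    the start of the following frame."""
    counts = []
    accumulator_ms = 0  # real time not yet consumed by a FixedUpdate
    for duration_ms in frame_durations_ms:
        accumulator_ms += duration_ms
        # Each whole fixed period that elapsed becomes one FixedUpdate;
        # the remainder carries over into the next frame.
        steps, accumulator_ms = divmod(accumulator_ms, fixed_dt_ms)
        counts.append(steps)
    return counts


# Hypothetical frame durations with a 50 ms fixed period.
print(fixed_updates_after_each_frame([40, 20, 20, 50, 110], fixed_dt_ms=50))
# [0, 1, 0, 1, 2]
```

Depending only on how long frames happen to take, the next frame may be preceded by zero, one, or two physics steps.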

In the majority of games, nearly all logic and state updates are executed inside the Update function. There's little point in doing extra work between frames (during FixedUpdate), because what gets rendered to screen is all that matters to the end user, and most of a game's systems do not rely on the physics simulation to run. So in games, it typically does not matter how many FixedUpdates happen per frame; a game may even have frames where the physics simulation was not updated at all, because FixedUpdate wasn't called during that Update cycle. This is typically fine, and the game logic is indifferent to whether zero or many FixedUpdates occurred.

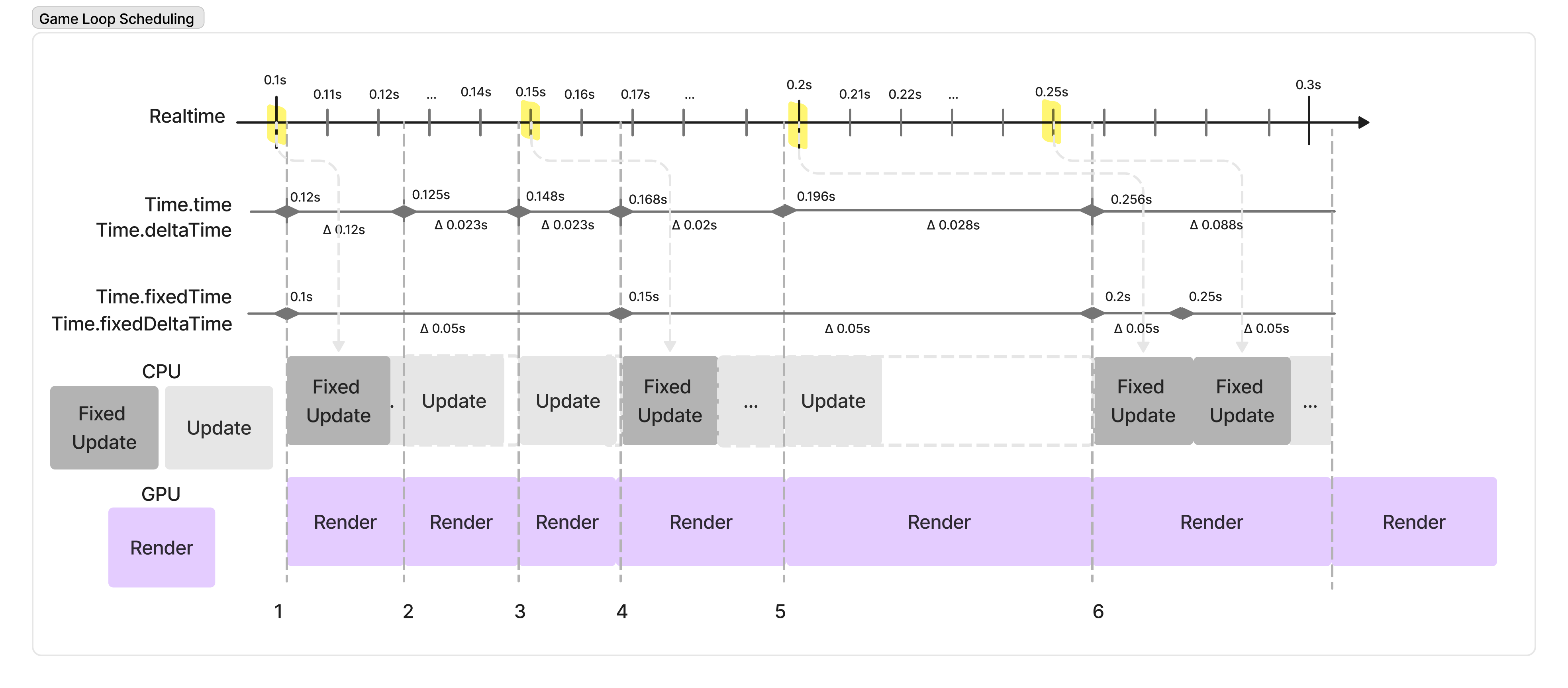

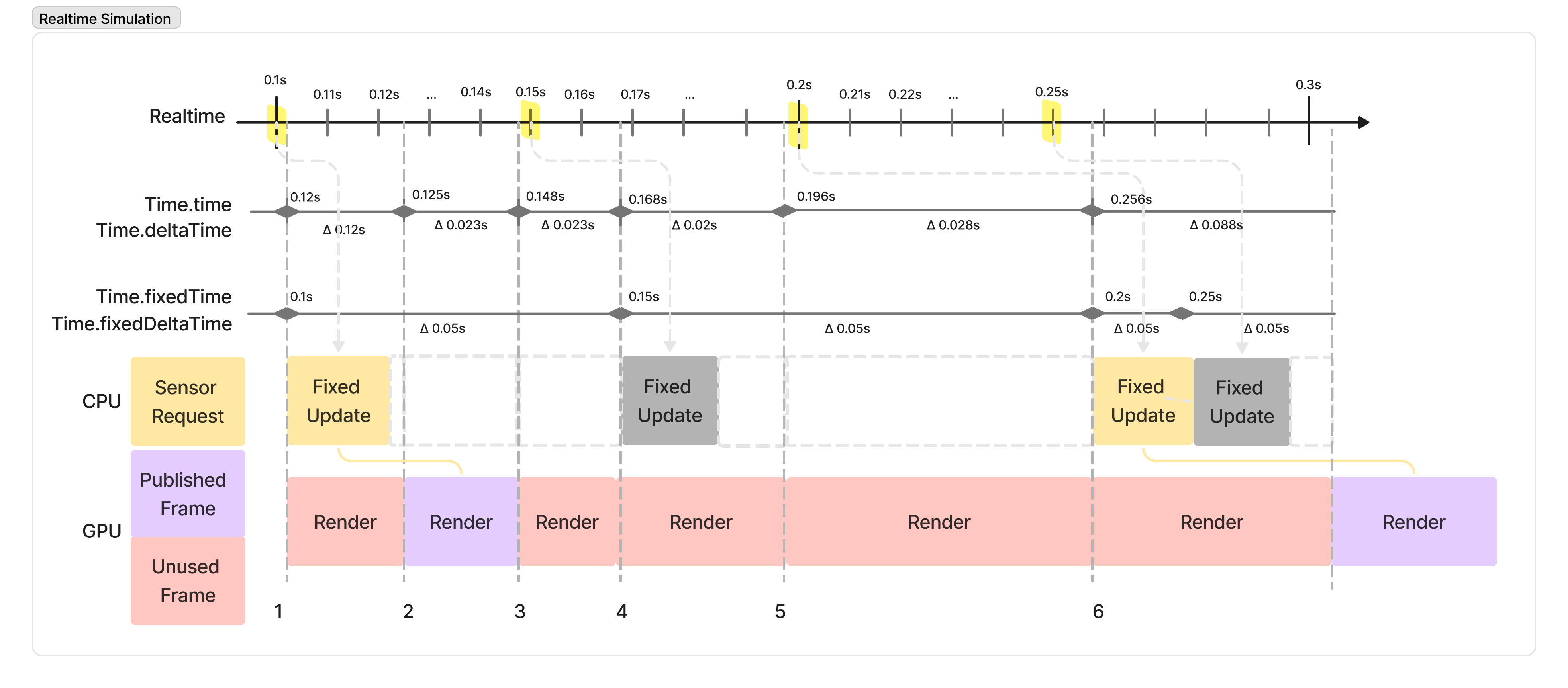

In simulation, it is not fine. If physics hasn't updated, what gets rendered to screen is redundant, because the state relevant to the simulation is likely tied to the physics simulation. Frames in which no FixedUpdates occur are useless to us. This requires the simulation developer to take control of the simulated clock and make some important adjustments to how Updates and FixedUpdates are scheduled. Consider the following hypothetical timeline:

The variability in the size of CPU/GPU work has been exaggerated for the purposes of illustration, but it is not uncommon for the game loop to render multiple frames without a physics update, or to apply multiple physics updates over a single frame.

In this scenario, we can see that over the course of 6 rendered frames, physics was updated only four times. Physics updates cannot be propagated to the renderer mid-frame, so every time Time.fixedDeltaTime elapses (highlighted on the timeline in yellow), a FixedUpdate is scheduled once the current frame finishes rendering. Because Frames 2 and 3 render quickly, only one FixedUpdate is triggered across their combined durations. Frame 5, on the other hand, runs long and results in two updates being scheduled for execution before Frame 6 renders.

Now, let's assume we are building a robotics simulation. In our simulation, we do not have much logic to execute in Update, because we only care about capturing or otherwise responding to changes in the physics simulation, and these changes only occur in FixedUpdate. So our simulation does almost no work in Update, and we want to simulate a camera that runs at 10 Hz. Say we are using a sensor implementation that uses AsyncGPUReadback, so that reading data back from the GPU doesn't stall the rendering pipeline, and we queue up our requests for data whenever 100 ms has elapsed in our simulation. Then, without using Clock Management, our simulation timeline looks something like this (for readability, we'll assume Updates are negligibly small):

When simulating in real time (that is, with the simulation keeping pace with the "wall clock"), most of the work done on the GPU is simply rendered to screen and then discarded, unless your sensor captures and publishes data every frame.

For most of the time the sim is running, it is producing output that our external processes will never consume. Astute diagram-readers may even notice that the request we dispatch in Frame 6 will unfortunately receive data from simulated time t = 0.25 s, rather than from the time at which it was requested, because Unity's scheduler stacked another FixedUpdate into that Frame.
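The Frame 6 problem can be reproduced with a small model: a sensor queues a readback request from FixedUpdate whenever 100 ms of simulated time has passed, but the GPU renders only once per frame, after all of that frame's FixedUpdates have run. This is a sketch under stated assumptions, not the package's sensor code: the 50 ms fixed step, the per-frame step counts, and the `simulate_captures` name are hypothetical, and the latency of `AsyncGPUReadback` itself is not modeled.

```python
def simulate_captures(steps_per_frame, fixed_dt_ms, period_ms):
    """Return (requested_at_ms, rendered_at_ms) for every sensor request."""
    results = []
    sim_time_ms = 0
    next_capture_ms = period_ms
    for steps in steps_per_frame:
        pending = []
        for _ in range(steps):  # this frame's FixedUpdates
            sim_time_ms += fixed_dt_ms
            if sim_time_ms >= next_capture_ms:
                pending.append(sim_time_ms)  # request queued at this sim time
                next_capture_ms += period_ms
        # The frame is rendered once, reflecting physics after the last step,
        # so every request queued during this frame sees that state.
        for requested_ms in pending:
            results.append((requested_ms, sim_time_ms))
    return results


# Hypothetical schedule: the last frame stacks two FixedUpdates.
print(simulate_captures([1, 1, 0, 1, 0, 2], fixed_dt_ms=50, period_ms=100))
# [(100, 100), (200, 250)]
```

In this model the second request is queued at t = 0.2 s, but the image it receives reflects t = 0.25 s, because a second FixedUpdate ran before the frame was rendered.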

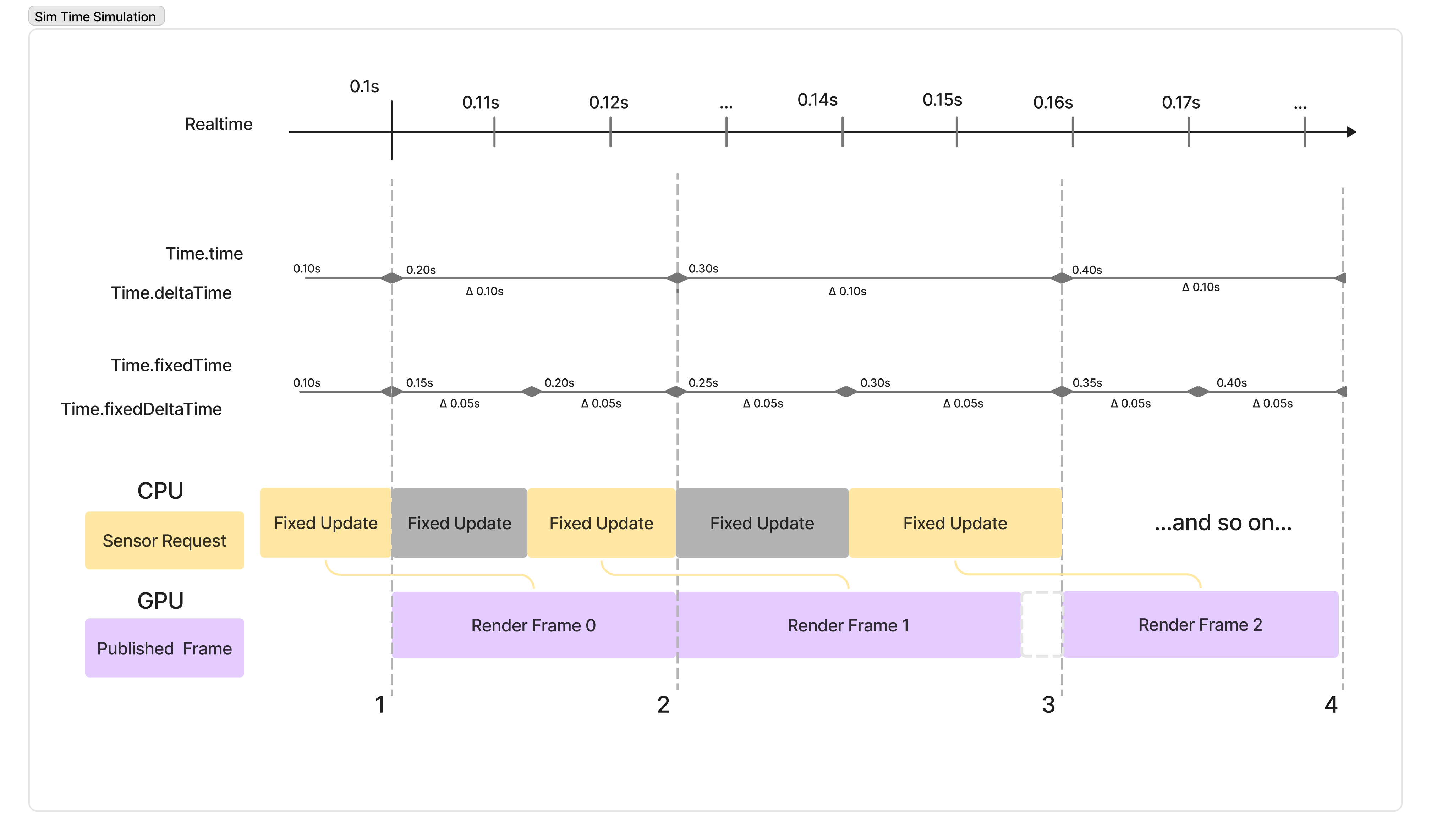

Let's examine this same scenario with our clock management Components configured such that, after every sensor message, we simulate 100 ms of time passing even though much less real time has elapsed. To achieve this behavior, you need only add a single Component from our package, the TimeStepScheduler (more on this later), to the Scene, and set its TimeStep parameter to 0.1 seconds. This ensures Unity always steps time forward by exactly 100 ms, and each component receives a Time.deltaTime value of 0.1 every Update.

By decoupling the simulated clock from the wall clock, we can ensure the simulation runs as fast as it can, rather than idling while it waits for enough real time to elapse.
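A decoupled clock, where simulated time advances by a fixed amount per frame regardless of how long the frame took in real time, can be sketched as follows. This is an illustrative model, not the TimeStepScheduler's implementation, which this page does not show; the function name, the 20 ms fixed step, and the per-frame wall-clock costs are hypothetical. (Unity's built-in `Time.captureDeltaTime` property produces a similar fixed per-frame advance, but whether the package relies on it is not stated here.)

```python
def run_decoupled_clock(wall_ms_per_frame, time_step_ms, fixed_dt_ms):
    """Advance simulated time by exactly `time_step_ms` per frame.

    Returns (simulated ms, wall-clock ms, FixedUpdates per frame)."""
    sim_ms = 0
    wall_ms = 0
    accumulator_ms = 0
    steps_per_frame = []
    for wall_cost_ms in wall_ms_per_frame:
        # The simulated clock ignores how long the frame took in real time.
        sim_ms += time_step_ms
        accumulator_ms += time_step_ms
        steps, accumulator_ms = divmod(accumulator_ms, fixed_dt_ms)
        steps_per_frame.append(steps)
        # Real time still accumulates, but only as fast as the hardware allows.
        wall_ms += wall_cost_ms
    return sim_ms, wall_ms, steps_per_frame


print(run_decoupled_clock([12, 30, 8, 15], time_step_ms=100, fixed_dt_ms=20))
# (400, 65, [5, 5, 5, 5])
```

Every frame now receives the same amount of physics (five 20 ms steps) and corresponds to exactly one 100 ms sensor period, while 400 ms of simulated time costs only 65 ms of hypothetical wall-clock time.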

Here we can see that although either the CPU or GPU thread may still bottleneck the simulation, we are guaranteed to generate one frame of useful data per Update, and time in the simulation may move as slowly or as quickly as we need it to. This is one of the most fundamental advantages testing in simulation has over real-world testing. In the real world, if a test requires a vehicle to drive 100 ft across a factory floor at a rate of 10 ft/second, the test takes 10 seconds. In simulation, it might take 1 second instead, or less if the CPU/GPU workloads are minimal. At scale, and without any downtime needed to reset a physical testing space to its original conditions, a year's worth of physical testing could easily be completed every month. To learn how to configure your simulation to take advantage of a non-realtime clock, please continue on to our Overview page.
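The factory-floor arithmetic can be made explicit. Assuming, hypothetically, that each 100 ms simulation step costs a constant 10 ms of real time to compute, a 10-second test finishes in about 1 second of wall-clock time. The function name and per-frame cost are illustrative; real frame costs vary with CPU/GPU load.

```python
def wall_time_ms(sim_ms: int, time_step_ms: int, wall_ms_per_frame: int) -> int:
    """Real time needed to simulate `sim_ms` when each frame advances the
    simulated clock by `time_step_ms` and takes `wall_ms_per_frame` to run."""
    frames = -(-sim_ms // time_step_ms)  # ceiling division on integers
    return frames * wall_ms_per_frame


# Real-world test: 100 ft at 10 ft/s takes 10 s.
real_world_ms = 100 * 1000 // 10
simulated_ms = wall_time_ms(real_world_ms, time_step_ms=100, wall_ms_per_frame=10)
print(real_world_ms, simulated_ms, real_world_ms / simulated_ms)
# 10000 1000 10.0
```

By the same arithmetic, completing a year's worth of testing every month corresponds to a sustained speedup of roughly 12x.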