Lidar segmentation

Lidar segmentation relies heavily on the Perception package. We recommend completing the Perception package tutorial before you start working with lidar segmentation.

To enable lidar segmentation, add the Perception package to your project, then attach Label components to GameObjects in your scene. The PathtracedSensingComponent in the scene will automatically use the label information to render a unique ID for each ray, which corresponds to the object hit by the ray.

Note

You must install the Perception package in your project to perform lidar segmentation.

Note

You can import the Perception library from the SensorSDK samples to get a lidar prefab and a demo scene that shows lidar segmentation.

Warning

Installing the Perception package and running it for the first time may produce an error about a misconfigured output endpoint. This error doesn't affect lidar segmentation. To remove the error, select Edit > Project Settings > Perception, and change the active endpoint to No Output Endpoint or Perception Endpoint.

Warning

Lidar segmentation requires a PathtracedSensingComponent. A Camera-Based Lidar solution isn't supported yet.

Convert lidar output to labels

To convert the unique IDs to the required format using the corresponding label configs, use one of two nodes: PhotosensorToInstanceSegmentation v2 or PhotosensorToSemanticSegmentation v2. Alternatively, you can use the raw data directly from the Photosensor v2 or Frame Bundler node output.

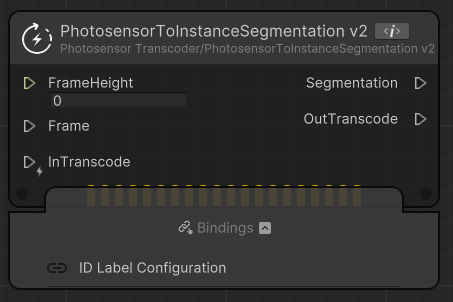

PhotosensorToInstanceSegmentation v2

This node accepts the raw output from the Frame Bundler node and encodes the photosensor raw data into a 2D texture according to a specified IdLabelConfig, provided by the Perception package. The label config maps the raw unique ID, which is randomly assigned to each Label component, to the label ID specified for the corresponding GameObject. The node has the following parameters:

- FrameHeight: The number of vertically arranged beams. This value is used as the output texture height.

- Frame: The output from the Frame Bundler node.

- ID Label Configuration (binding): The IdLabelConfig to use for the mapping.
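The remapping that these parameters describe can be illustrated with a small, engine-independent sketch. This is not the SensorSDK implementation. The dictionary `raw_to_label` is a hypothetical stand-in for an IdLabelConfig lookup, and the function name `to_instance_texture` is illustrative. The sketch also assumes that the samples of one sweep are ordered firing by firing, with FrameHeight consecutive samples (one per vertical beam) per firing, and that unknown raw IDs map to label 0.

```python
from typing import Dict, List, Sequence

# Hypothetical label ID used for rays that hit nothing labeled (assumption).
UNLABELED = 0


def to_instance_texture(
    raw_ids: Sequence[int],
    raw_to_label: Dict[int, int],
    frame_height: int,
) -> List[List[float]]:
    """Arrange one full sweep of raw unique IDs into a frame_height-row grid of label IDs.

    Assumes each firing contributes frame_height consecutive samples,
    so each firing becomes one column of the output texture.
    """
    if frame_height <= 0 or len(raw_ids) % frame_height != 0:
        raise ValueError("sample count must be a positive multiple of frame_height")
    width = len(raw_ids) // frame_height
    texture = [[0.0] * width for _ in range(frame_height)]
    for i, raw_id in enumerate(raw_ids):
        column, row = divmod(i, frame_height)
        # Store integer label IDs as floats, mirroring a floating-point texture.
        texture[row][column] = float(raw_to_label.get(raw_id, UNLABELED))
    return texture
```

For example, with `frame_height=3` and `raw_to_label={101: 1, 202: 2}`, the six samples `[101, 202, 0, 101, 999, 202]` become a 3×2 grid. Raw IDs that are missing from the mapping, such as `0` and `999`, fall back to label 0.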

When the lidar completes a full sweep, the node writes a 2D floating-point texture to its Segmentation output. The values in this texture are the integer label IDs. To visualize the data, use a LUT Mapping node with a qualitative LUT, which maps consecutive integers to distinct colors.
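The following sketch shows why a qualitative LUT suits label IDs. It is not the LUT Mapping node itself. The eight-color palette `QUALITATIVE_LUT` is hypothetical, and the SensorSDK LUT assets may use different colors or handle out-of-range IDs differently. In this sketch, each integer ID selects a palette entry and IDs past the end wrap around.

```python
from typing import List, Sequence, Tuple

RGB = Tuple[int, int, int]

# Hypothetical qualitative palette: adjacent entries are visually distinct.
QUALITATIVE_LUT: List[RGB] = [
    (31, 119, 180), (255, 127, 14), (44, 160, 44), (214, 39, 40),
    (148, 103, 189), (140, 86, 75), (227, 119, 194), (127, 127, 127),
]


def lut_map(
    label_texture: Sequence[Sequence[float]],
    lut: Sequence[RGB] = QUALITATIVE_LUT,
) -> List[List[RGB]]:
    """Color each integer label ID; IDs beyond the palette length wrap around."""
    return [[lut[int(value) % len(lut)] for value in row] for row in label_texture]
```

A sequential (gradient) LUT would give neighboring IDs, such as 3 and 4, nearly identical colors. That is why a qualitative palette is the better fit for categorical label IDs.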

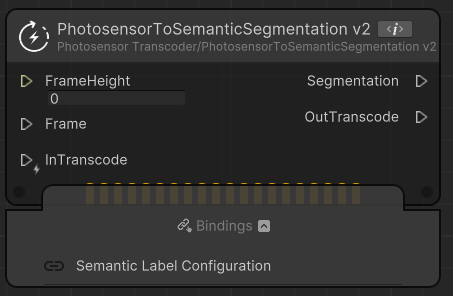

PhotosensorToSemanticSegmentation v2

This node uses the unique IDs captured in the input Frame to generate a semantic segmentation texture. The class labels are defined in a SemanticSegmentationLabelConfig object from the Perception package. That object assigns a color to each class, so you don't need LUT mapping to visualize the output. The node has the following parameters:

- FrameHeight: The number of vertically arranged beams. This value is used as the output texture height.

- Frame: The output from the Frame Bundler node.

- Semantic Label Configuration (binding): The SemanticSegmentationLabelConfig to use for the mapping.

When the lidar completes a full sweep, the node writes a 2D ARGB32 texture to its Segmentation output. Each pixel holds the color of the class that the corresponding ray hit.
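The following sketch illustrates the semantic variant under stated assumptions. It is not the SensorSDK implementation. The dictionaries `raw_to_class` (raw unique ID to class name) and `class_colors` (class name to RGBA color) are hypothetical stand-ins for what a SemanticSegmentationLabelConfig provides. The opaque black `BACKGROUND` color for unlabeled hits is also an assumption. The sample ordering matches the instance sketch: FrameHeight consecutive samples per firing. Each color is packed as 8 bits per channel in A, R, G, B order.

```python
from typing import Dict, List, Sequence, Tuple

RGBA = Tuple[int, int, int, int]

# Hypothetical color for rays with no labeled hit (assumption).
BACKGROUND: RGBA = (0, 0, 0, 255)


def pack_argb32(color: RGBA) -> int:
    """Pack 8-bit RGBA channels into one 32-bit value laid out as 0xAARRGGBB."""
    r, g, b, a = color
    return (a << 24) | (r << 16) | (g << 8) | b


def to_semantic_texture(
    raw_ids: Sequence[int],
    raw_to_class: Dict[int, str],
    class_colors: Dict[str, RGBA],
    frame_height: int,
) -> List[List[int]]:
    """Build a frame_height-row grid of packed ARGB32 class colors for one sweep."""
    if frame_height <= 0 or len(raw_ids) % frame_height != 0:
        raise ValueError("sample count must be a positive multiple of frame_height")
    width = len(raw_ids) // frame_height
    texture = [[0] * width for _ in range(frame_height)]
    for i, raw_id in enumerate(raw_ids):
        column, row = divmod(i, frame_height)
        # Unknown raw IDs or classes without a color fall back to the background.
        color = class_colors.get(raw_to_class.get(raw_id), BACKGROUND)
        texture[row][column] = pack_argb32(color)
    return texture
```

Because the colors come straight from the label config, the output is viewable without a LUT. This matches the behavior the node description gives.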